Something we've been asked a number of times during our HUB Q&A series is, "Why doesn't Intel develop its own version of 3D V-Cache?" It's a thought-provoking question, especially given how beneficial 3D V-Cache has been for AMD's Ryzen chips, despite some drawbacks.

Those drawbacks include decreased core clock speeds, higher power usage, and higher operating temperatures. In the case of Ryzen CPUs, the clock speed reduction isn't significant since those parts aren't clocked very high to begin with, and the performance uplift achieved with the larger L3 cache more than offsets the decrease in clock speed. Power usage isn't a major concern either, and while thermals can be an issue with Ryzen processors, throttling is easy to avoid.

In the case of Intel's CPUs, at least their current 13th and 14th-gen Core series, the power budget is pretty well maxed out. This has been the subject of much discussion over the past few years and recently came to a head.

We also know that Intel relies heavily on clock frequency to get the most out of their parts. So you might be wondering whether more L3 cache could solve their issues, allowing lower clock speeds and therefore lower power consumption, while also boosting gaming performance beyond what we're currently seeing.

To get an idea of whether more L3 cache would help, we've revisited testing we previously did with the 10th-gen Core series, this time using the newer 14th-gen parts (aka Raptor Lake). Essentially, this is a cores vs. cache benchmark.

We took the Core i9-14900K, Core i7-14700K, and Core i5-14600K, disabled the E-cores completely, locked the P-cores at 5 GHz with the Ring Bus at 3 GHz, and then tested three configurations: 8 cores with the 14900K and 14700K (the 14600K only has six P-cores), 6 cores with all three processors, and 4 cores with all three processors.

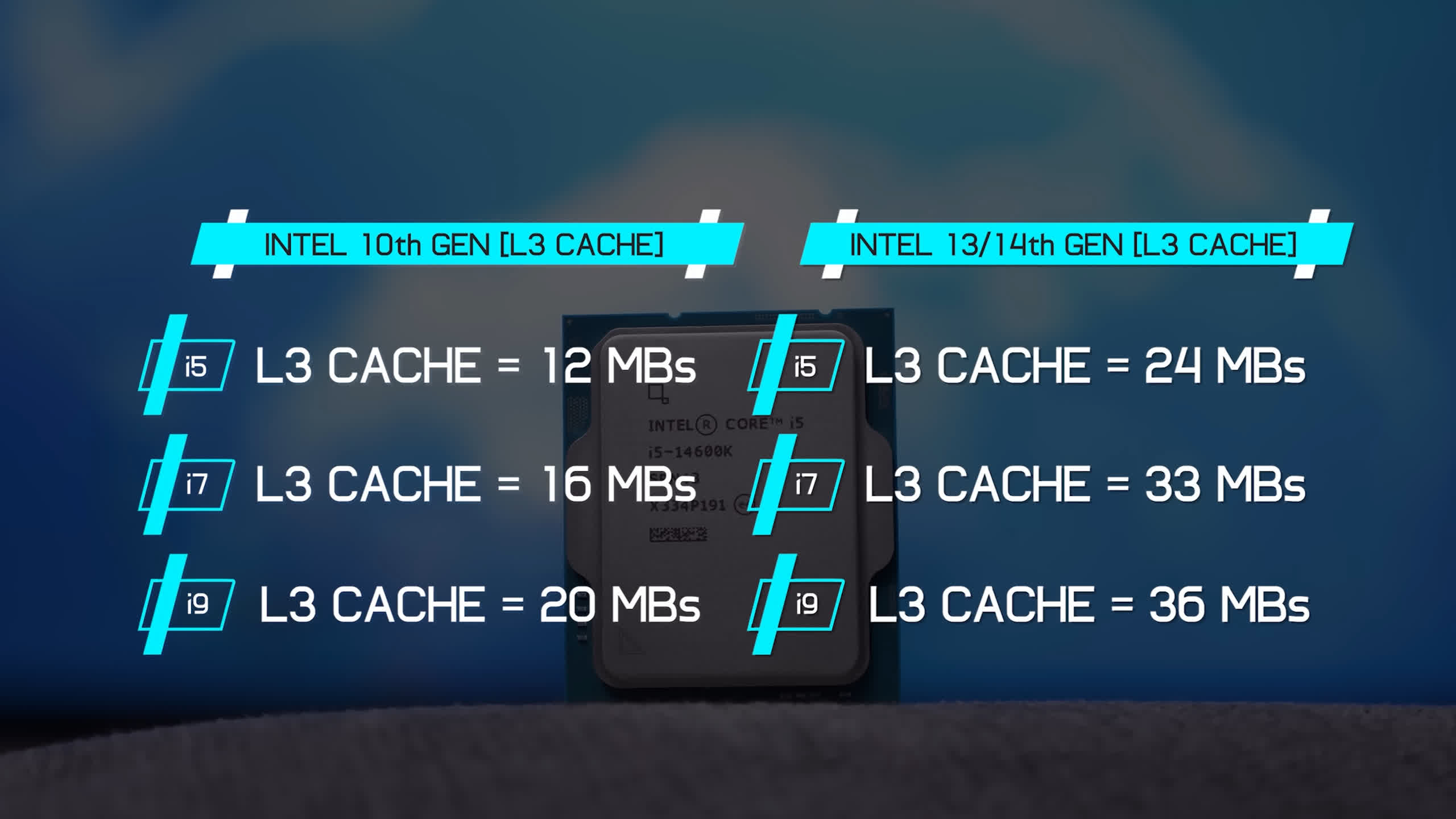

This testing method gave us great insight into how the 10th-gen series compared and what was responsible for most of the performance uplift in games back then (the answer in that case was L3 cache). However, the 10th-gen series had considerably less L3 cache: just 20 MB for the Core i9s, 16 MB for the i7s, and 12 MB for the i5s. Fast forward to today, and the 14600K has more L3 cache than the 10900K, at 24 MB, while the 14700K has 33 MB and the 14900K has 36 MB.
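To make the test matrix explicit, here is a minimal Python sketch that enumerates the configurations described above. The P-core counts and L3 capacities are the stock specs quoted in the text; the names (`CPUS`, `test_matrix`) are hypothetical and purely illustrative. The L3 column is each chip's SKU spec, and we're assuming it for every core count, since the article doesn't state whether any cache is lost when cores are disabled.

```python
# Hypothetical sketch of the cores vs. cache test matrix.
# Clock settings and per-SKU specs are taken from the article text;
# the L3 value is the stock SKU capacity (assumed unchanged when cores are disabled).
P_CORE_CLOCK_GHZ = 5.0
RING_CLOCK_GHZ = 3.0

CPUS = {
    # name: (physical P-cores, L3 cache in MB)
    "Core i9-14900K": (8, 36),
    "Core i7-14700K": (8, 33),
    "Core i5-14600K": (6, 24),
}

ACTIVE_CORE_COUNTS = (8, 6, 4)


def test_matrix():
    """Return (cpu, active P-cores, L3 MB) for every configuration a CPU can run."""
    configs = []
    for cores in ACTIVE_CORE_COUNTS:
        for name, (p_cores, l3_mb) in CPUS.items():
            # A CPU can only run as many P-cores as it physically has,
            # which is why the 14600K is absent from the 8-core results.
            if cores <= p_cores:
                configs.append((name, cores, l3_mb))
    return configs


if __name__ == "__main__":
    for name, cores, l3_mb in test_matrix():
        print(f"{name}: {cores} P-cores @ {P_CORE_CLOCK_GHZ} GHz, "
              f"ring {RING_CLOCK_GHZ} GHz, {l3_mb} MB L3")
```

This yields eight configurations in total: two at 8 cores and three each at 6 and 4 cores, which is why the 8-core comparisons only ever pit the 14900K against the 14700K.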

The cores themselves are also clocked higher, though the core configuration has changed a lot, especially with the E-cores disabled. Finally, we're also using DDR5-7200 memory and the GeForce RTX 4090, so let's see what the benchmark results look like.

Benchmarks

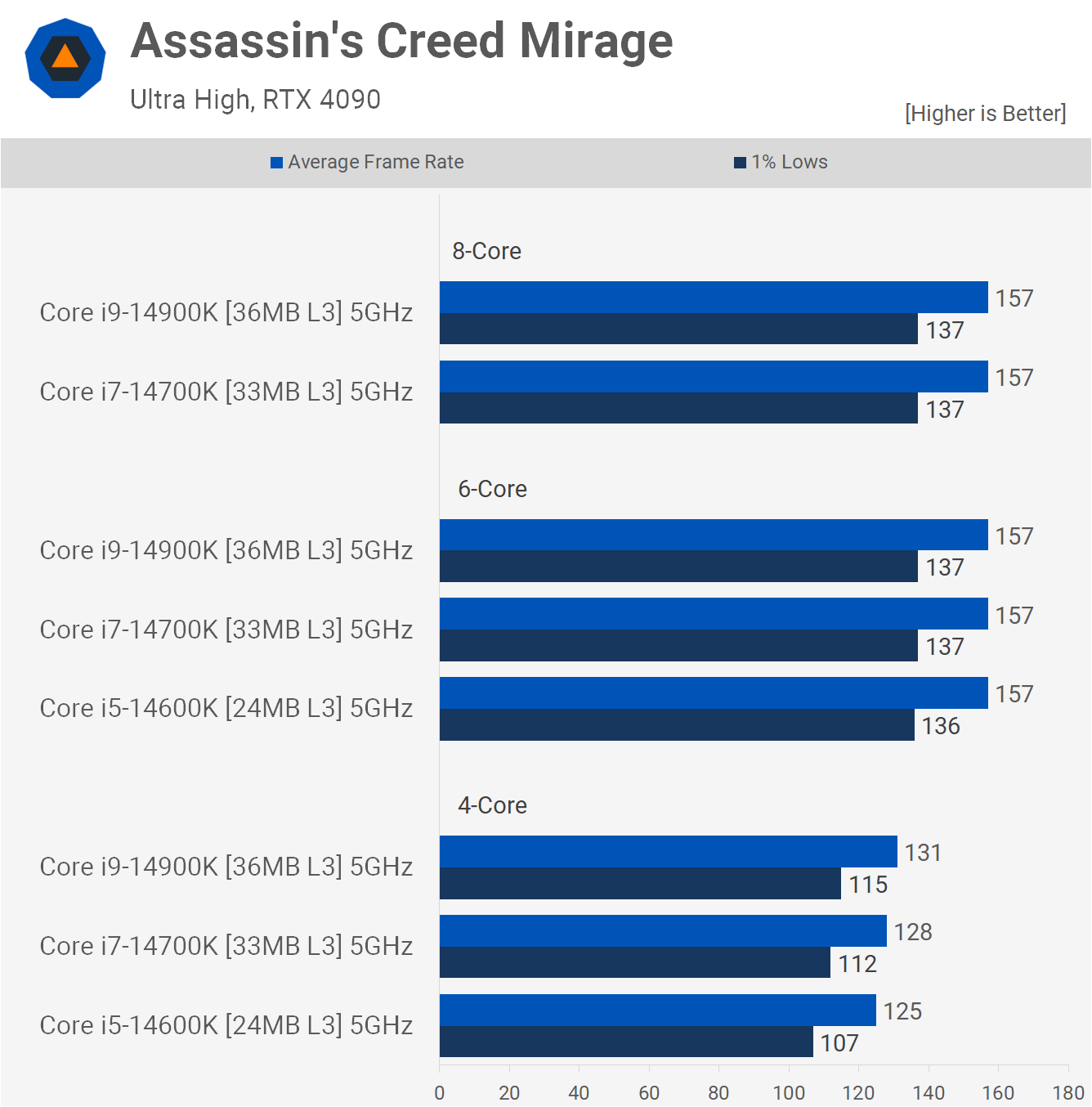

Starting with the Assassin's Creed Mirage results, this data looks nothing like our 10th-gen testing. The results are unexpected: they're clearly CPU-limited, yet adding more cores (going from 6 to 8) or increasing the L3 cache capacity doesn't boost performance. Rather, the bottleneck for the 6- and 8-core configurations appears to be the 5 GHz clock frequency, suggesting that the primary limitation of the 14th-gen architecture is frequency, not L3 cache capacity, at least in this example.

Dropping down to just 4 cores does see performance fall away, and cache capacity now plays a role, albeit a very small one. Still, it's remarkable to see the 14900K drop less than 20% in this test with just half its P-cores active.

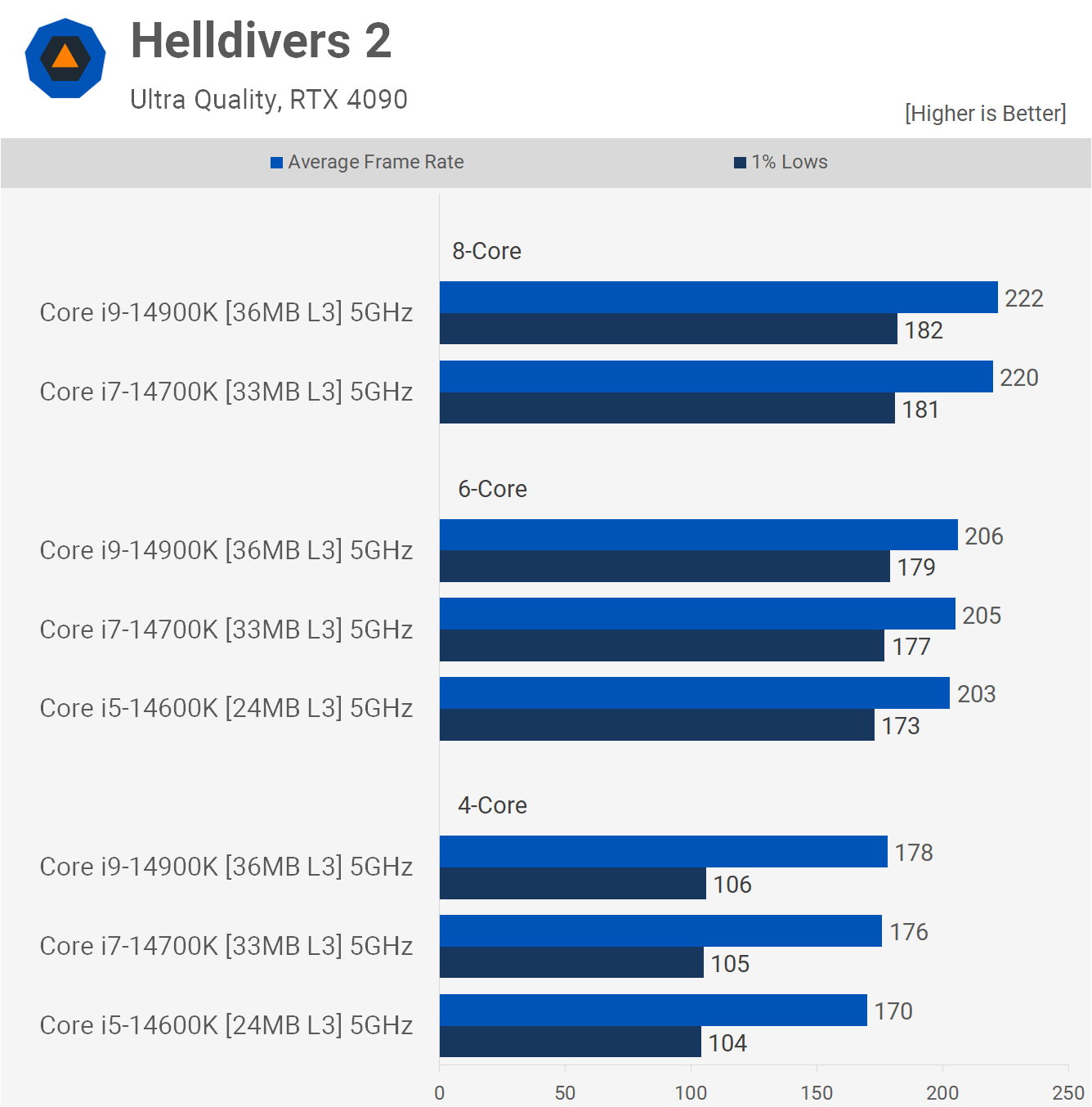

Helldivers 2 does see some performance degradation when dropping from 8 to 6 active cores, though we're only talking about a 7% reduction in the average frame rate, with a much smaller decrease in the 1% lows. Dropping to just 4 cores does slash performance, particularly the 1% lows, which fall by a little over 40%, leading to a less than ideal experience.

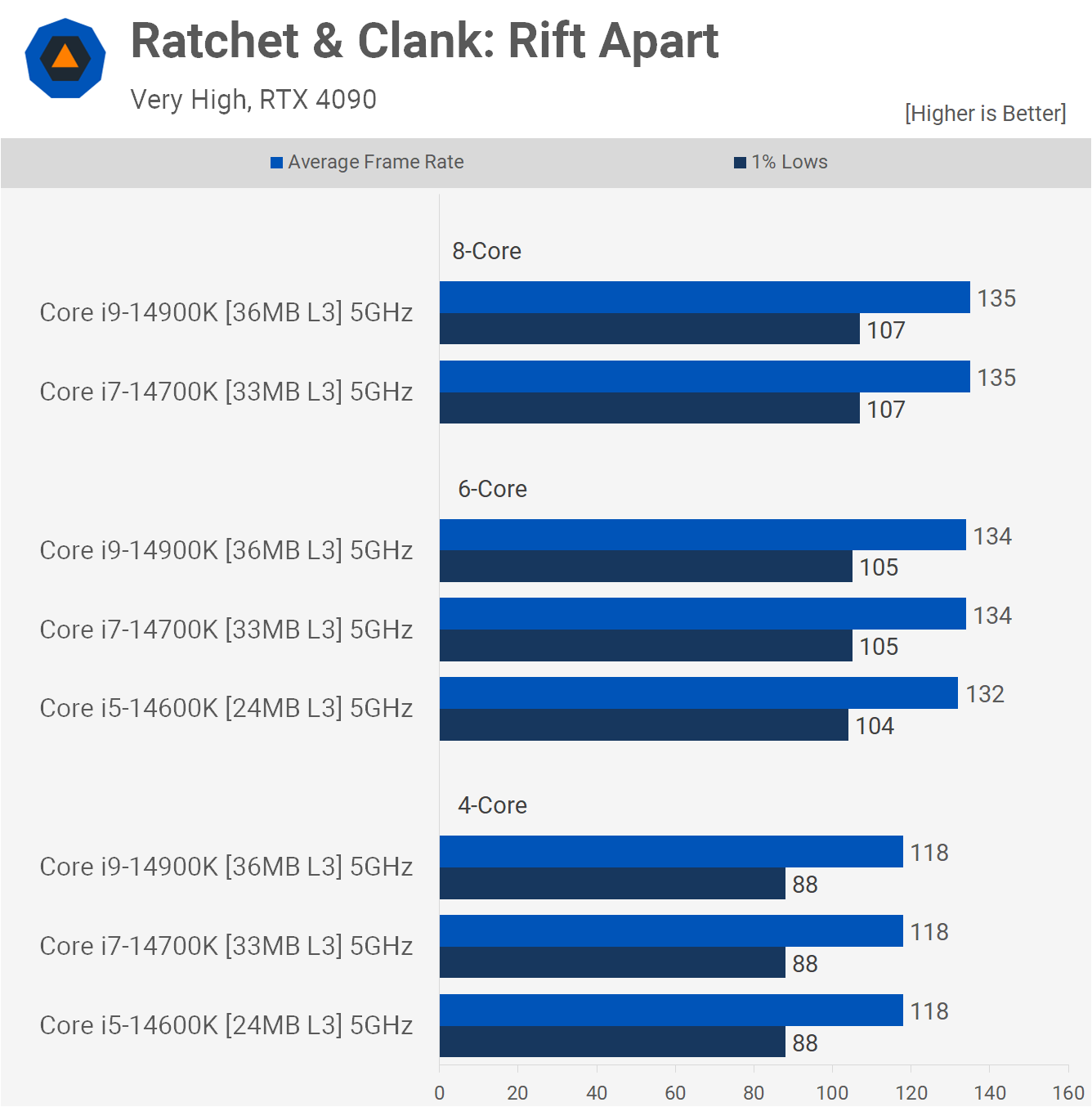

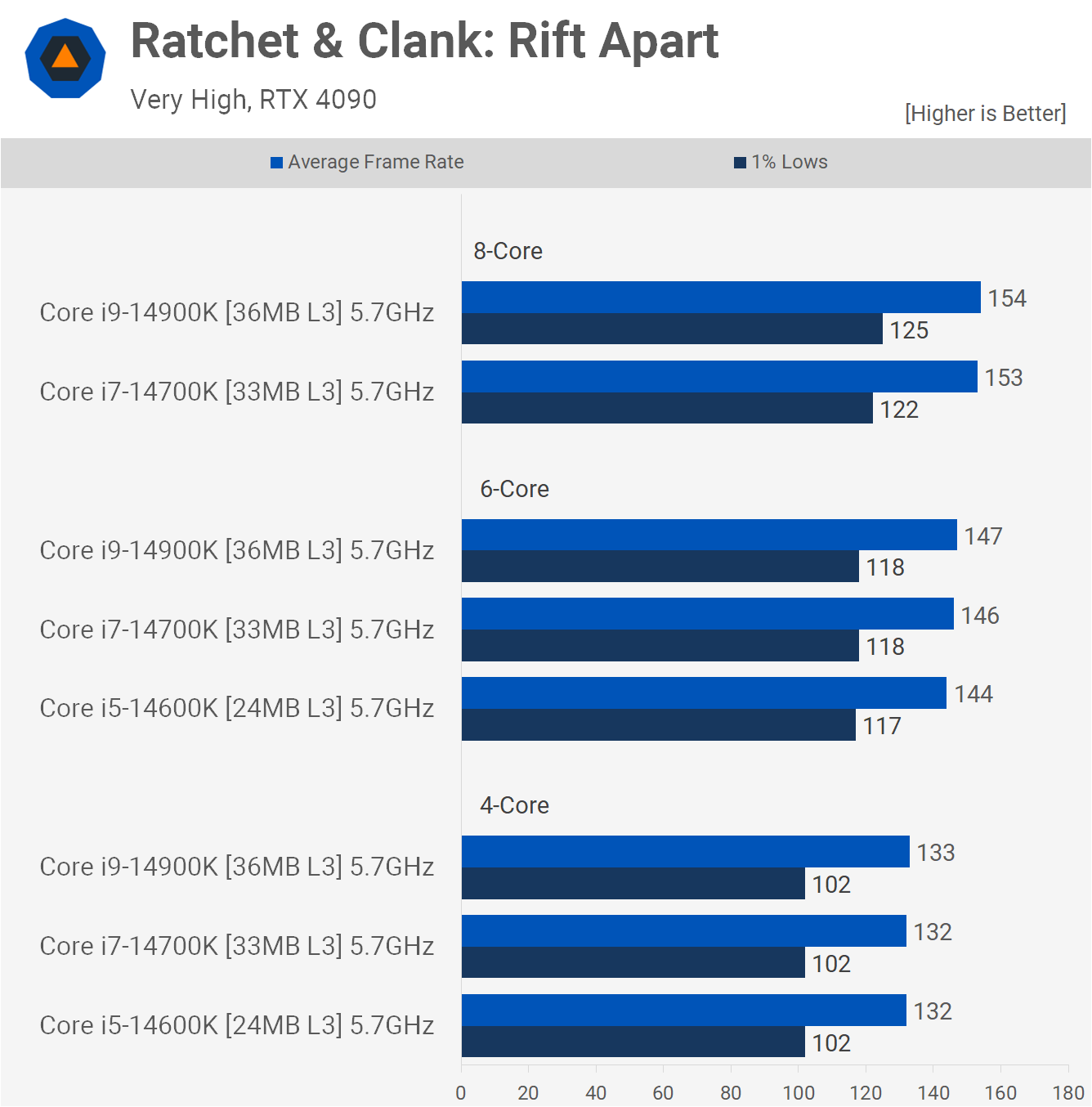

Ratchet & Clank, like Assassin's Creed Mirage, shows almost no difference in performance between the 6- and 8-core configurations, again suggesting that core clock frequency is the primary bottleneck. It's not until we reduce the active P-core count to just 4 that we see some performance degradation, and the drop-off is much the same regardless of L3 cache capacity.

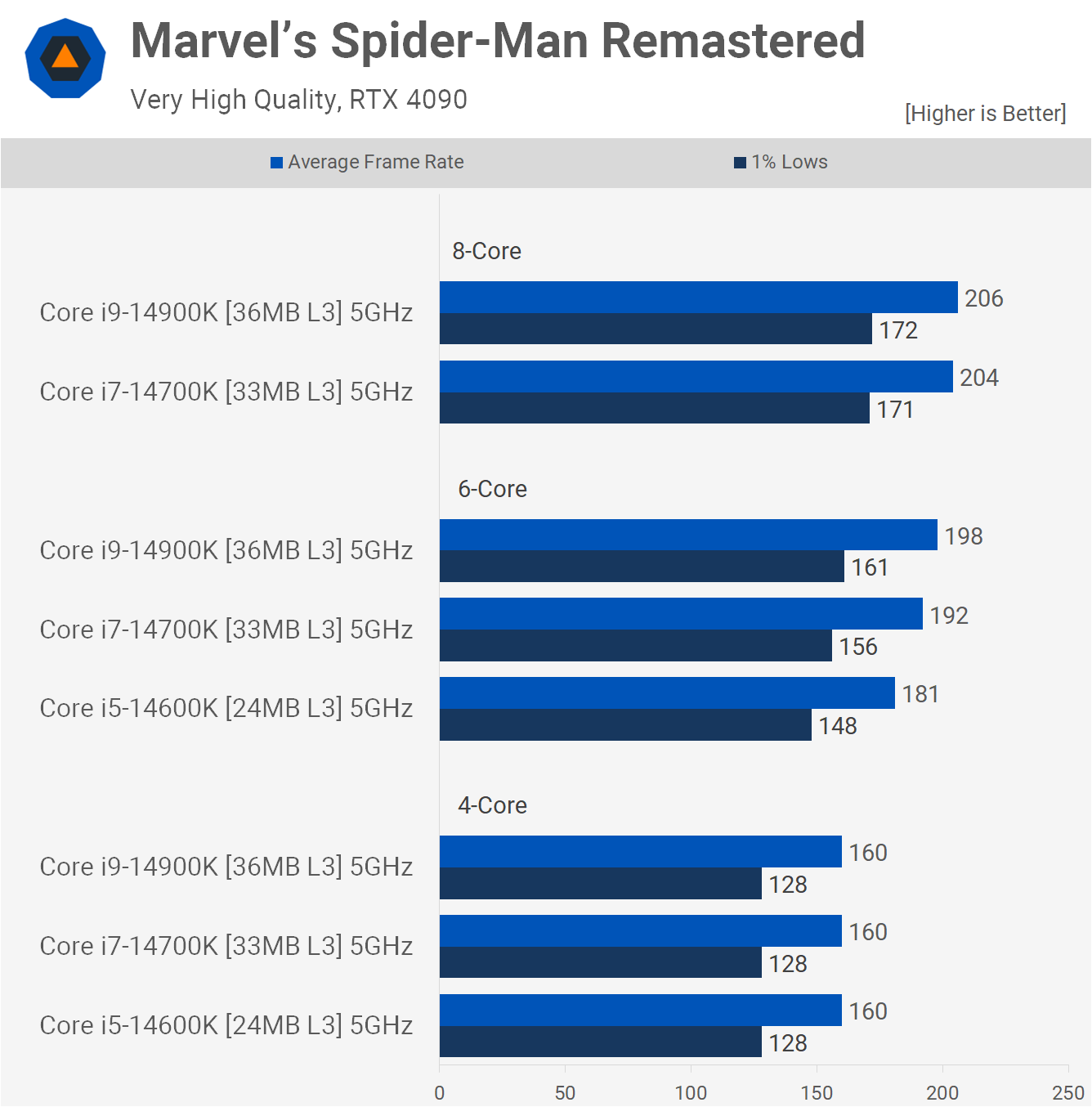

The Spider-Man Remastered results are interesting for a few reasons. Firstly, with 8 active cores, the 14900K and 14700K delivered virtually identical results at 5 GHz, so once again clock frequency was the primary bottleneck. When dropping down to 6 cores, cache seems to become more important, though the margins aren't huge: the 14700K was just 3% slower than the 14900K, for example.

Then, quite oddly, with just 4 cores active the results become frequency-limited again, with performance dropping by only 22% compared to the 8-core results.

Cyberpunk 2077: Phantom Liberty shows very little difference between the 8-core configurations. The 14700K is again just 3% slower than the 14900K, and we're only seeing a 7% reduction in performance with 6 cores active, then a 22% reduction going from 8 cores to just 4. Regardless of how many cores are active, cache capacity seems to play a very small role at 5 GHz.

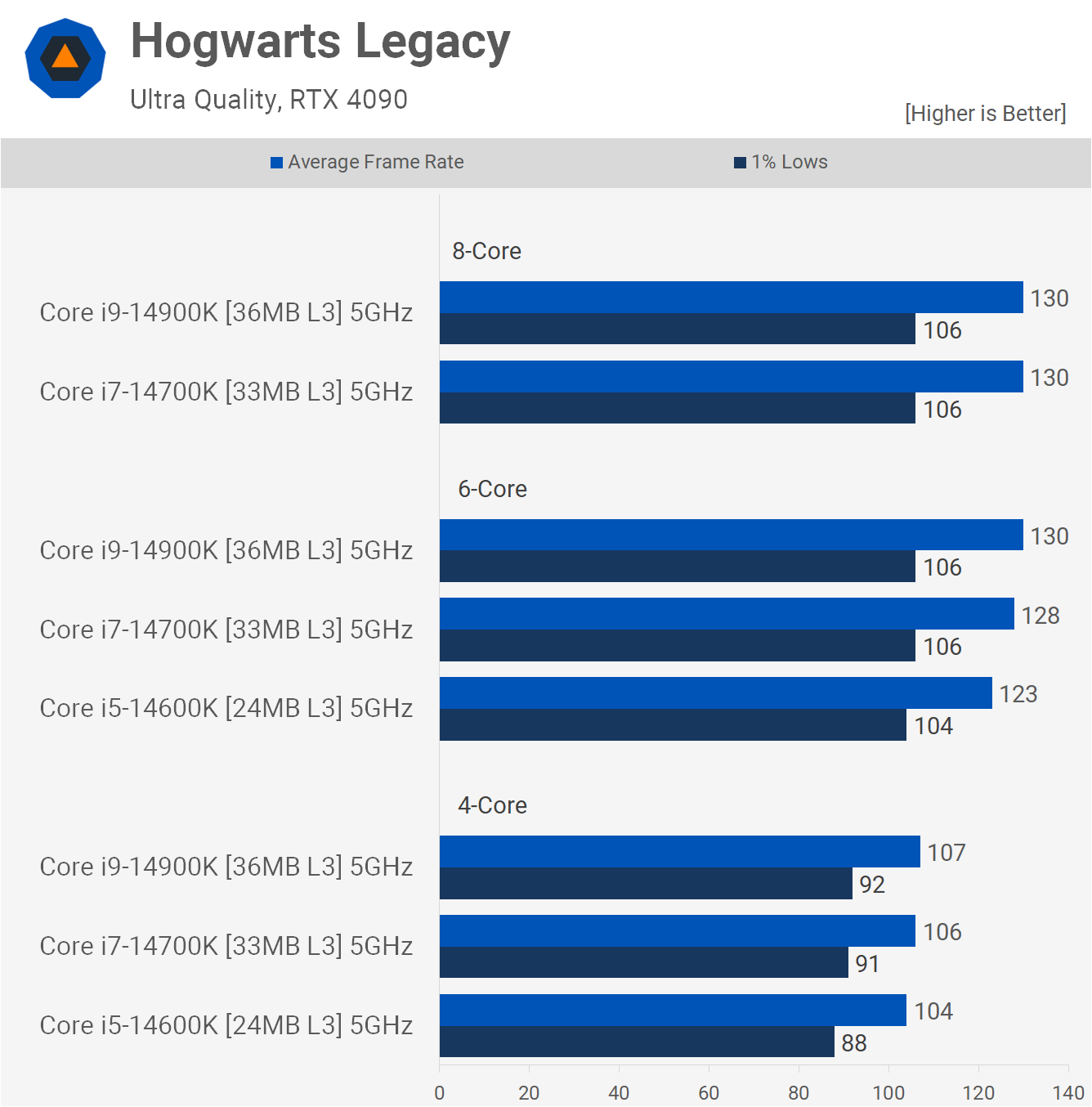

Hogwarts Legacy shows no difference in performance between the 8-core configurations, with only minor variation at 6 cores. The difference between the 14600K and 14900K here is just 5%, and it's even smaller with just 4 cores active. The performance difference between 8 and 6 active cores is also very small, while 4 cores reduce frame rates by just shy of 20%.

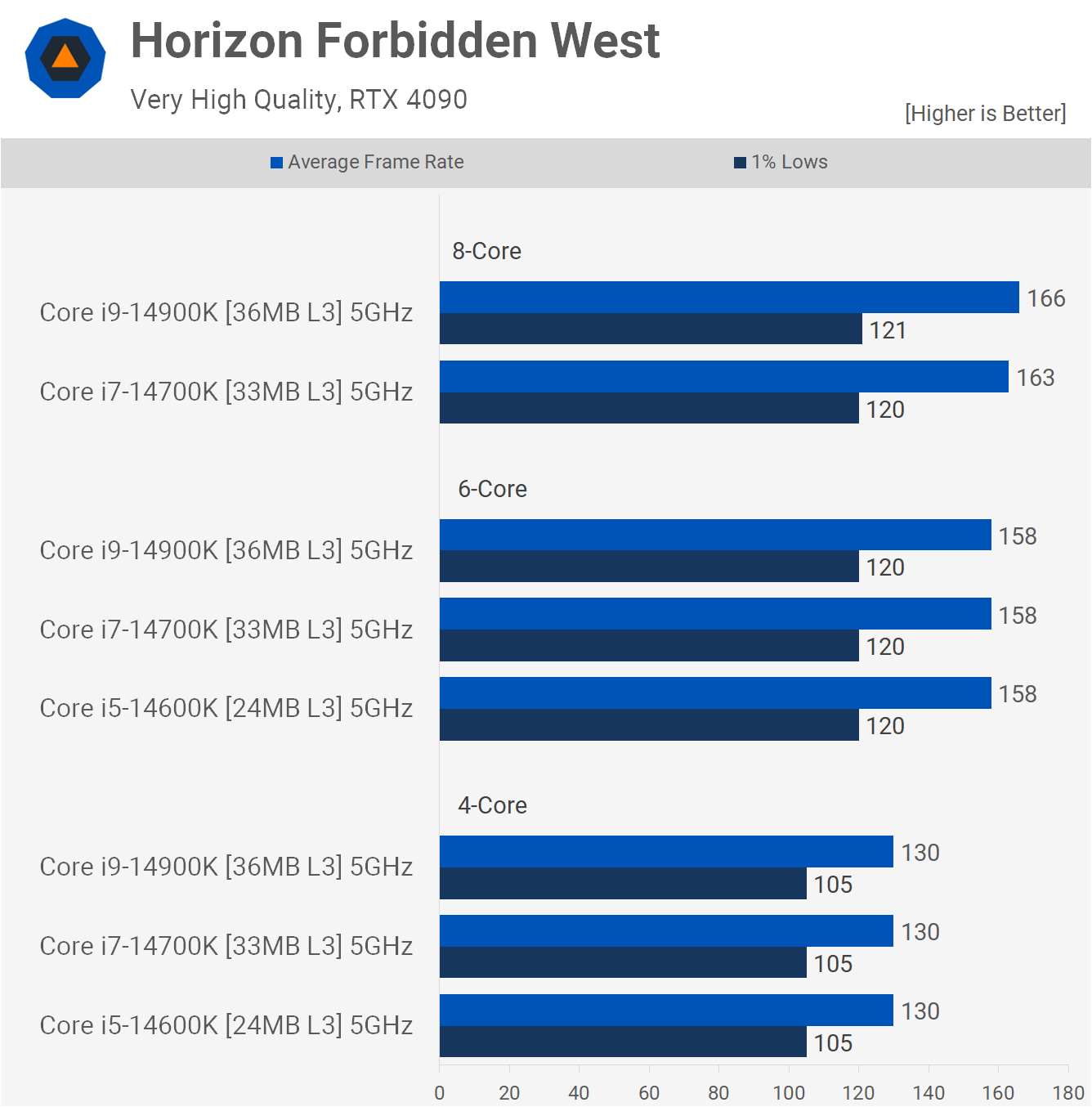

Horizon Forbidden West is another game that appears primarily frequency-limited, as cache capacity makes no difference here regardless of the core configuration. Dropping from 8 to 6 cores reduced the performance of the 14900K by only 5%, while going from 8 to 4 cores saw a 22% drop, which is what we've typically come to expect.

Dragon's Dogma II is known to be a very CPU-limited game, but like most of the titles tested, we're not seeing much of a performance improvement when increasing L3 cache capacity. The 14900K, for example, was just 4% faster than the 14700K when all 8 P-cores were active.

With 6 cores, the 14700K was 9% faster than the 14600K, while the 14900K was again 4% faster than the 14700K. Then with just 4 cores active, core count becomes the primary bottleneck.

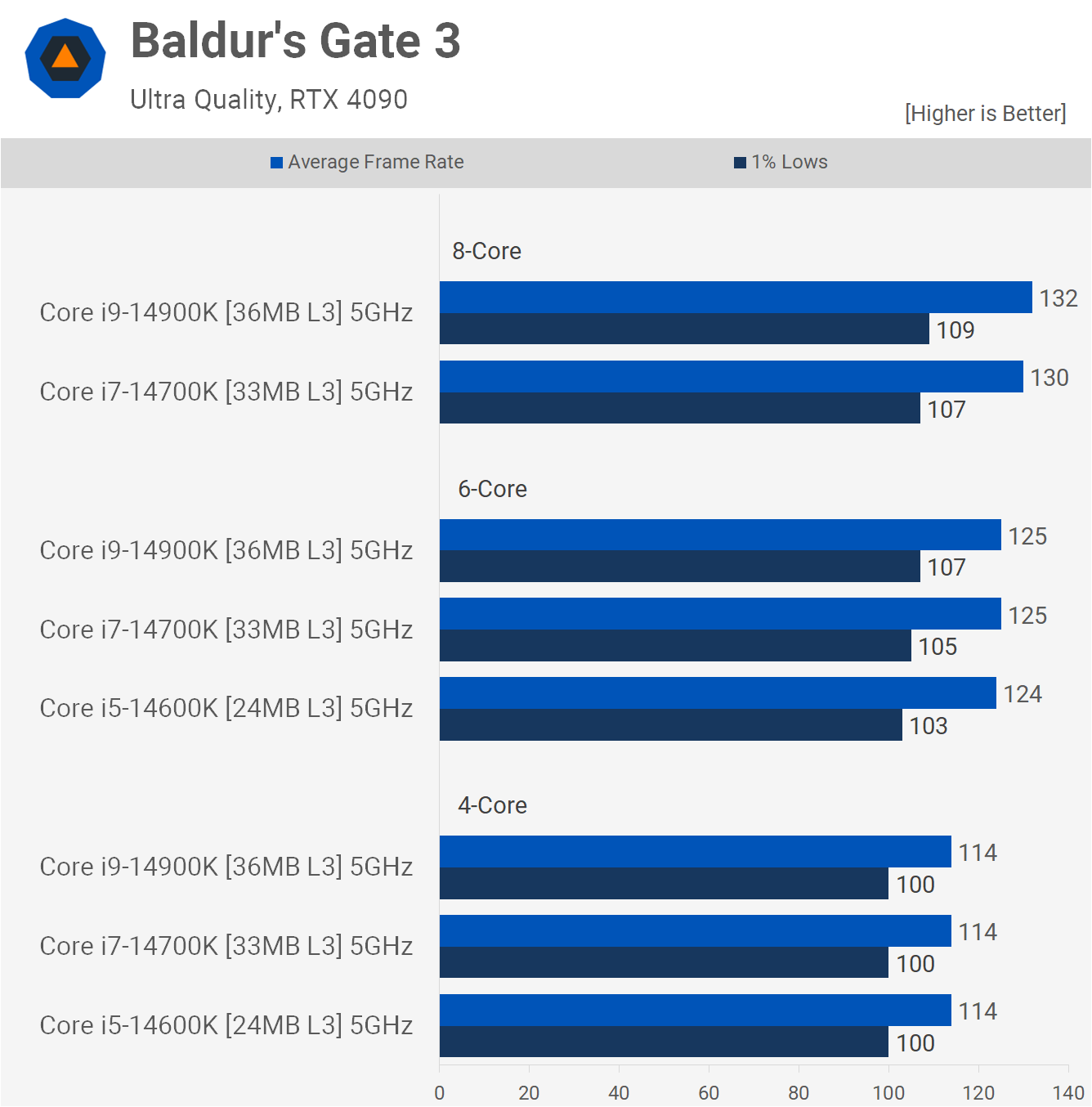

Baldur's Gate 3 performance was again much the same using either the 14700K or 14900K, and we saw at most a 5% decrease in performance when dropping down to 6 cores. With just 4 active cores, the 14900K was only 14% slower than in the 8-core configuration.

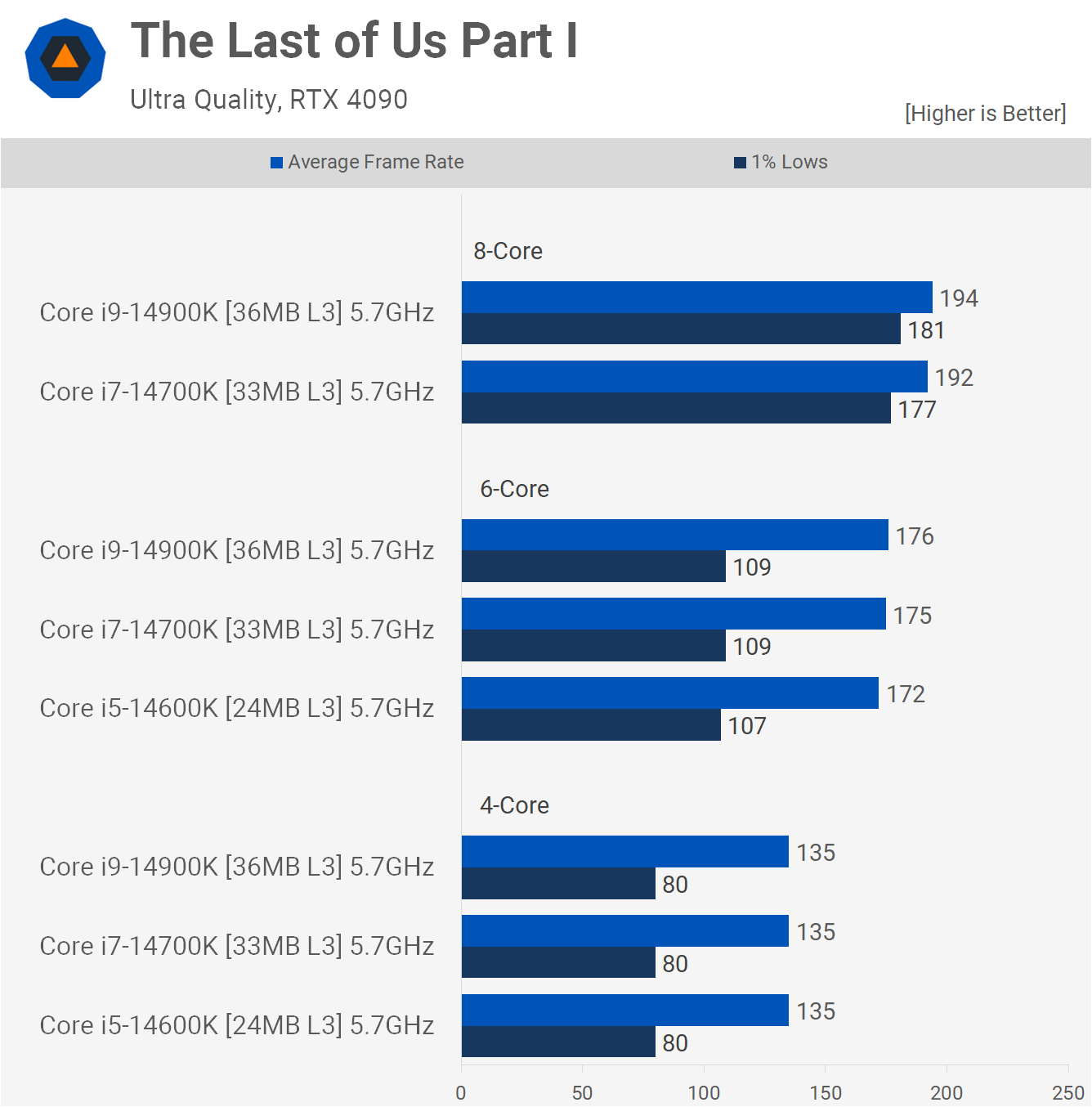

The Last of Us Part I has some really interesting results. Here, we're looking at a 5% performance drop from the 14900K to the 14700K with 8 cores enabled. With 6 cores, that margin shrinks to just 2%, and with 4 cores there's no difference at all. Instead, it's the core count that makes the most difference in this example.

For example, the 14900K saw a 10% reduction in average frame rate when going from 8 to 6 cores and a 36% drop for the 1% lows. Then from 6 to 4 cores, the average frame rate dropped by a further 24%, with a 32% reduction for the 1% lows. So going from 8 active cores to just 4 saw the 1% lows more than halve in this example.
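Successive percentage drops compound multiplicatively rather than adding up, which is why two drops of 36% and 32% leave less than half of the original 1% lows. The short Python sketch below checks that arithmetic using the rounded percentages quoted above; `remaining_fraction` is a hypothetical helper name, and because the inputs are rounded, the outputs are approximate.

```python
def remaining_fraction(*drops_pct: float) -> float:
    """Fraction of the original performance left after successive percentage drops."""
    fraction = 1.0
    for drop in drops_pct:
        # Each drop applies to whatever is left after the previous one.
        fraction *= 1.0 - drop / 100.0
    return fraction


# The Last of Us Part I, 14900K: 8 -> 6 cores, then 6 -> 4 cores.
avg_left = remaining_fraction(10, 24)   # average frame rate
lows_left = remaining_fraction(36, 32)  # 1% lows

print(f"Average frame rate at 4 cores: {avg_left:.1%} of the 8-core result")
print(f"1% lows at 4 cores: {lows_left:.1%} of the 8-core result")
```

This works out to roughly 68% of the 8-core average frame rate and roughly 44% of the 8-core 1% lows, confirming that the lows more than halved. Applying the same function to the Starfield figures (11%, then 24%) likewise gives about 68% of the 8-core result.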

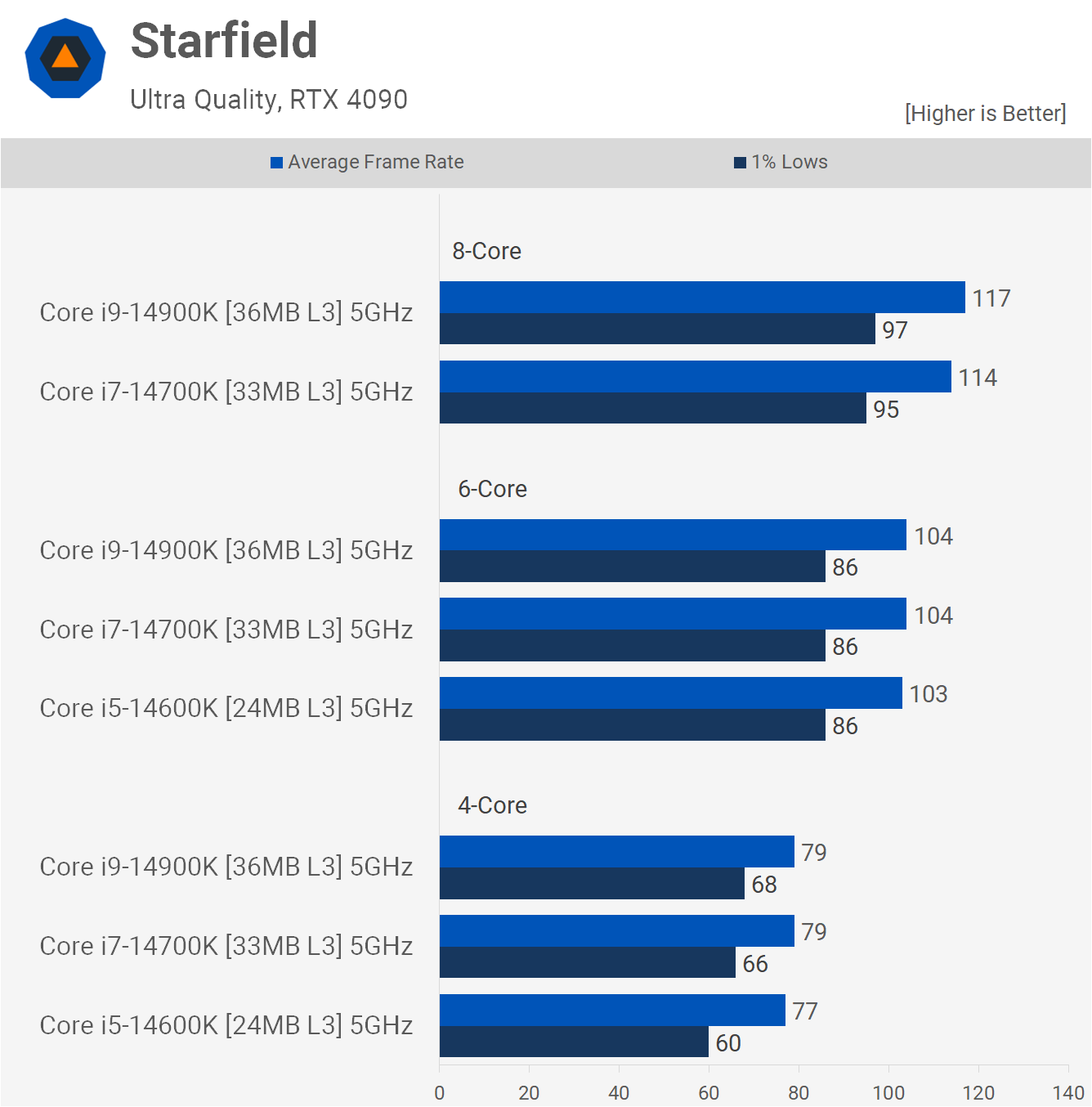

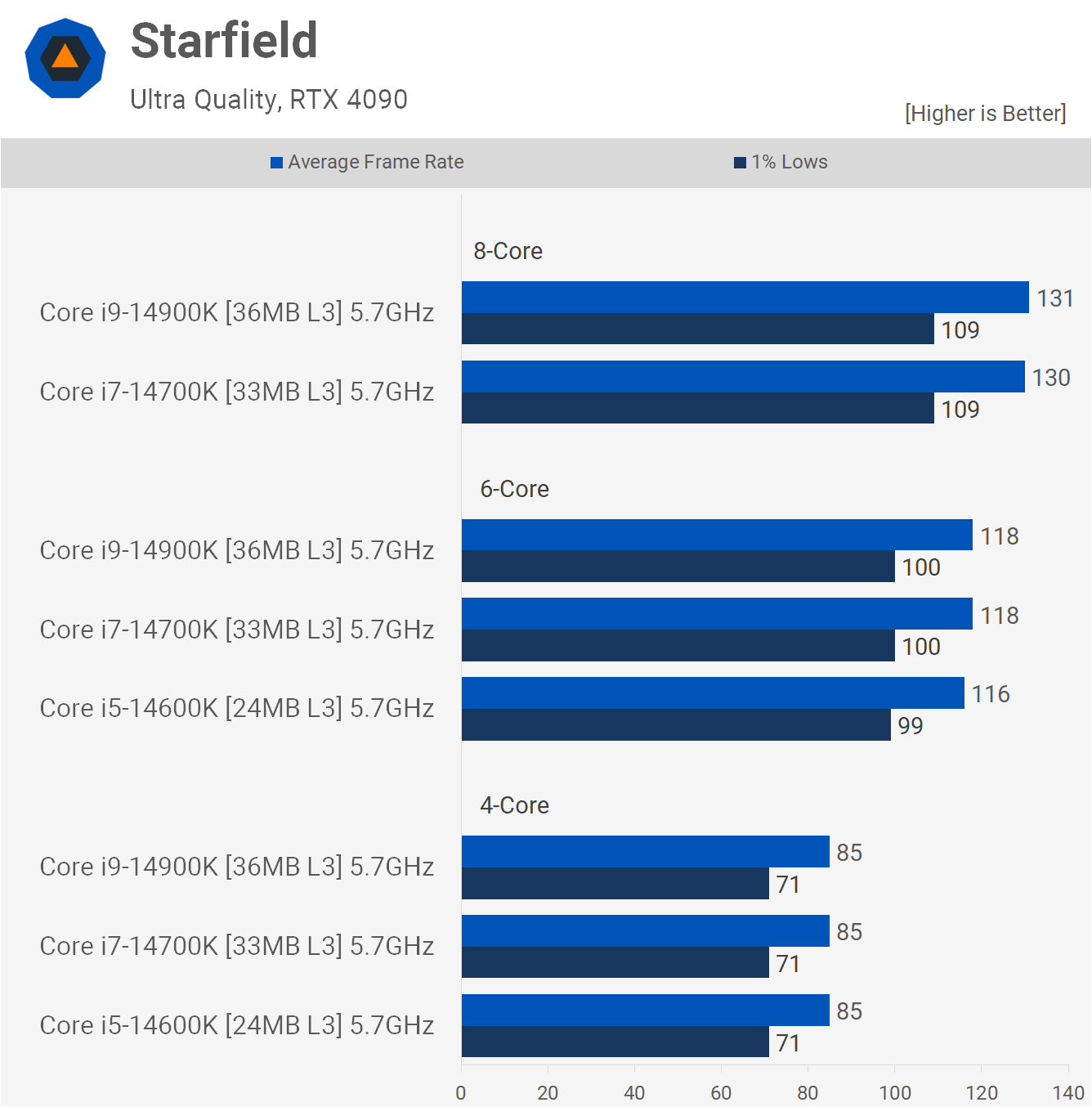

Starfield is another game that scales well with cores, and yet another example where the cache capacity has little to no impact on this testing. The 14900K saw an 11% performance reduction when going from 8 to 6 cores, and then a further 24% reduction from 6 to 4.

Overclocking at 5.7 GHz

Before wrapping up this testing, we went back and re-ran a few of these benchmarks with the cores clocked 14% higher at 5.7 GHz, along with a 33% increase to the Ring Bus at 4 GHz.
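The quoted increases follow directly from the clock settings. Here's a quick Python check, where `percent_increase` is a hypothetical helper and the clock values are the ones given in the text.

```python
def percent_increase(old: float, new: float) -> float:
    """Percentage change going from old to new."""
    return (new / old - 1.0) * 100.0


# Clock settings from the article: P-cores 5.0 -> 5.7 GHz, ring bus 3.0 -> 4.0 GHz.
core_gain = percent_increase(5.0, 5.7)
ring_gain = percent_increase(3.0, 4.0)

print(f"P-core clock: +{core_gain:.0f}%")   # P-core clock: +14%
print(f"Ring bus clock: +{ring_gain:.0f}%")  # Ring bus clock: +33%
```

As a rough, idealized assumption, a game that was purely core-frequency-bound and scaled linearly with core clock would gain at most around 14% from the core overclock alone, though the simultaneous ring bus increase makes real-world scaling harder to attribute to either clock.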

We did this because we anticipated that some extremely well-informed people out there would call the testing useless because 14th-gen processors can clock higher and 5 GHz is unrealistic, even though we've already learned that the bottleneck is clock frequency and, in some instances, core count, not cache capacity. So yes, clocking these parts higher will boost performance, but beyond that, it won't teach us anything new.

Anyway, having tested a few games, we found similar trends, so the limitation here for 14th gen really is clock speed. More cache doesn't appear to help.

What We Learned

We have to say those results were quite surprising, as they're very different from the 10th-gen results we recorded three years ago. Back then, we found that when locked at the same frequency, there wasn't much difference between the Core i5, i7, and i9 processors in most games, and when there was a difference, it could largely be attributed to the L3 cache.

But with the 14th gen, the cache capacity doesn't appear to matter much. In most examples, 24 MB was enough. Oddly, an increased cache capacity often helped the most when fewer cores were active, though that wasn't always the case.

What made the most difference was the core count; the upgrade from 4 to 6 cores was often very significant, though none of these parts come with just 4 cores. There were also a few examples where going from 6 to 8 cores made quite a difference.

We can thus conclude from this data that, because clock frequency is the primary bottleneck and 3D V-Cache comes at the cost of lower clock speeds, adding it to these Intel 14th-gen Core CPUs would likely be detrimental, only serving to reduce gaming performance (at least in today's games), which is surprising.

We also imagine that going to 10 or even 12 P-cores in today's games would only result in a very minor performance gain over 8 cores. So, for Intel, the only way forward with their current architecture is clock speed, which explains why things have gone the way they have, at least to this point.